Global Illumination Using 3D Textures

Let's say you have a ray-marched scene and you want to spice it up with some global illumination. What's the first approach you come up with? Perhaps something like this:

- Cast several rays distributed evenly throughout a hemisphere above the surface.

- See if they strike a surface, and if they do, note its color, how strongly it is illuminated, and how far away it is.

- For each ray, consider its contribution to the global illumination to be something like color * brightness / distance² (light falls off with the square of the distance).

- Sum those contributions and add the result to the final color of the surface/pixel.
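The accumulation step in that list can be sketched in C++ as follows. This assumes the ray marching has already produced one record per hemisphere ray. `Vec3`, `Hit`, and `naiveGI` are illustrative names, not part of the original shader.

```cpp
#include <cassert>
#include <vector>

// Minimal RGB triple standing in for GLSL's vec3 (illustrative only).
struct Vec3 {
    float x, y, z;
};

// Hypothetical record of what one hemisphere ray found.
struct Hit {
    bool  hit;         // did the ray strike a surface?
    Vec3  color;       // color of the surface it struck
    float brightness;  // how strongly that surface is lit
    float distance;    // how far away the surface is (must be > 0)
};

// Sums color * brightness / distance^2 over every ray that struck something.
Vec3 naiveGI(const std::vector<Hit>& hits) {
    Vec3 sum{0.0f, 0.0f, 0.0f};
    for (const Hit& h : hits) {
        if (!h.hit) continue;                 // misses contribute nothing
        assert(h.distance > 0.0f);
        float w = h.brightness / (h.distance * h.distance);  // inverse-square falloff
        sum.x += h.color.x * w;
        sum.y += h.color.y * w;
        sum.z += h.color.z * w;
    }
    return sum;
}
```

A green surface lit at brightness 1 and 2 units away contributes a quarter of its color. A red surface lit at brightness 2 and 1 unit away contributes twice its color.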

Additionally, if surfaces have high-frequency, high-contrast textures, then purely stochastic samples will not provide reliable results. Some rays cast through the hemisphere might land in a dark region on an otherwise green object. Such a ray would report that the object was black and not re-emitting any light, though in reality it was casting a gentle green. When rays are strictly stochastic frame by frame, this can create slightly noticeable and highly irritating flickering. So we have to add yet another multiplicative factor to the cost, either multi-frame averaging or a Gaussian blur, to fix this artifacting. That leaves us with O(n⁴), much too slow for real time.
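One common form of multi-frame averaging is an exponential moving average. Each frame, the stored result moves a fraction `alpha` toward the new noisy sample. The article does not say which averaging scheme it means, so this C++ sketch and its `accumulate` helper are an assumption.

```cpp
#include <cassert>

// Exponential moving average across frames (an assumed stand-in for
// "multi-frame averaging"). alpha is the weight given to the newest sample.
float accumulate(float history, float sample, float alpha) {
    assert(alpha > 0.0f && alpha <= 1.0f);
    return history + alpha * (sample - history);  // same as GLSL mix(history, sample, alpha)
}
```

Feed it alternating 0 and 1 samples, the worst case for flicker, with `alpha = 0.1`. The history settles into a swing of roughly ±0.03 around 0.5 instead of the full 0-to-1 flicker. A smaller `alpha` suppresses more flicker but makes the result lag behind genuine lighting changes (ghosting), which is the usual trade-off.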

My Rough-and-Ready Approach

Does my technique find a way to optimize around all of this? Not really. Rather, it sidesteps the problem entirely, thanks to the nature of the distance field and the way it is textured.

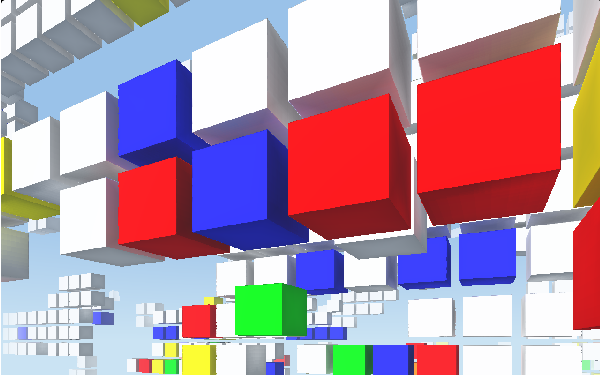

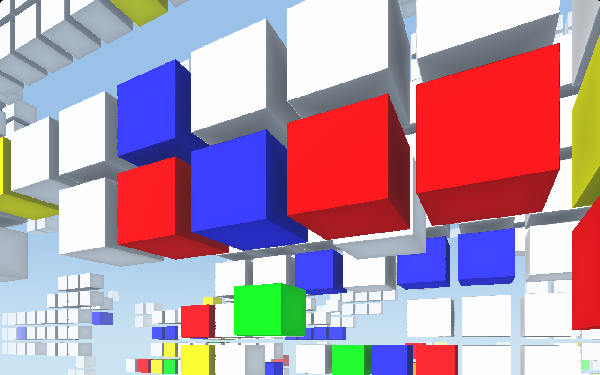

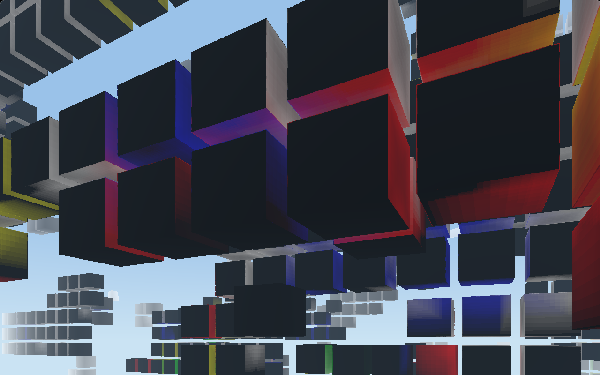

The scene, as you can see, consists of many cubes aligned to a grid. This gives us some assumptions we can leverage. First, any cube above a surface that may contribute a meaningful amount of secondary illumination will be in a neighboring voxel, so if we sample one unit away in any direction, we will land in that neighboring voxel. Second, the color of the cube within a voxel is defined in 3D space and is homogeneous across the entire voxel, not just at the surface of the cube. This means we can sample well within or above a cube and still get an accurate result.
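Both properties can be illustrated in C++. The sketch assumes a unit grid in which cell (i, j, k) spans [i, i+1) on each axis. `Cell`, `cellOf`, and the hash-based `voxelColor` are hypothetical, not the shader's actual texture.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical integer voxel coordinate on a unit grid.
struct Cell { int x, y, z; };

// Voxel containing a point. std::floor (not truncation) keeps negative
// coordinates in the right cell: -0.5 belongs to cell -1, not cell 0.
Cell cellOf(float px, float py, float pz) {
    return Cell{static_cast<int>(std::floor(px)),
                static_cast<int>(std::floor(py)),
                static_cast<int>(std::floor(pz))};
}

// Hypothetical per-voxel color (packed 0xRRGGBB) computed from the cell alone,
// so every point in one voxel, on the cube's surface or deep inside it,
// gets exactly the same color.
unsigned voxelColor(Cell c) {
    unsigned h = static_cast<unsigned>(c.x) * 73856093u
               ^ static_cast<unsigned>(c.y) * 19349663u
               ^ static_cast<unsigned>(c.z) * 83492791u;
    return h & 0xFFFFFFu;
}
```

Consider a point near the top face of a cube in cell (0, 0, 0), such as (0.5, 0.9, 0.5). Stepping one unit along the normal lands at (0.5, 1.9, 0.5), which is in cell (0, 1, 0): the neighbor directly above.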

Using these two facts, instead of marching each ray through the hemisphere, we can simply waltz forward by 1 unit and sample existence and color.

What isn't well defined throughout the voxel is the magnitude of primary illumination. Therefore we assume a blanket value for the brightness of each contribution.
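Putting the one-step lookup and the blanket brightness together gives the C++ sketch below. Several pieces are hypothetical: the `Scene` map from occupied cells to colors, the unit-grid convention, and `oneStepGI` itself. The directions are assumed to be unit length.

```cpp
#include <cassert>
#include <cmath>
#include <map>
#include <tuple>
#include <vector>

struct Vec3 { float x, y, z; };

// Hypothetical voxel scene: occupied cells mapped to their homogeneous color.
using Key = std::tuple<int, int, int>;
using Scene = std::map<Key, Vec3>;

// Instead of ray marching along each direction, take a single unit step and
// ask the grid whether that cell is filled and, if so, what color it is.
// Every hit gets the same blanket brightness, since primary lighting is not
// known throughout the voxel. The result is averaged over all directions.
Vec3 oneStepGI(const Scene& scene, Vec3 p, const std::vector<Vec3>& dirs,
               float blanketBrightness) {
    assert(blanketBrightness >= 0.0f);
    Vec3 sum{0.0f, 0.0f, 0.0f};
    for (const Vec3& d : dirs) {
        Key k{static_cast<int>(std::floor(p.x + d.x)),
              static_cast<int>(std::floor(p.y + d.y)),
              static_cast<int>(std::floor(p.z + d.z))};
        auto it = scene.find(k);
        if (it == scene.end()) continue;  // empty neighbor: no bounce light
        sum.x += it->second.x * blanketBrightness;
        sum.y += it->second.y * blanketBrightness;
        sum.z += it->second.z * blanketBrightness;
    }
    float inv = dirs.empty() ? 0.0f : 1.0f / static_cast<float>(dirs.size());
    return Vec3{sum.x * inv, sum.y * inv, sum.z * inv};
}
```

With a green cube directly above the point and nothing to its side, sampling those two directions at brightness 0.8 yields a green contribution of 0.4. Each lookup is a single map query, so the cost no longer depends on how far a ray would have had to march.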

The Algorithm

This is pretty straightforward, so I'll just leave some code here. Essentially, we use Rodrigues' rotation formula to rotate a vector around the surface normal, creating a field of samples on a unit hemisphere. These samples are averaged and returned.
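For reference, this is the formula that `rodRot` implements. It rotates **a** by t radians about the axis **b**, which must be unit length. A quick worked check follows, rotating x̂ about ẑ by a quarter turn:

$$
\operatorname{rot}(\mathbf{a}, \mathbf{b}, t) = \mathbf{a}\cos t + (\mathbf{b}\times\mathbf{a})\sin t + \mathbf{b}\,(\mathbf{b}\cdot\mathbf{a})(1-\cos t), \qquad \lVert\mathbf{b}\rVert = 1
$$

$$
\mathbf{a}=\hat{x},\ \mathbf{b}=\hat{z},\ t=\tfrac{\pi}{2}:\quad \hat{x}\cdot 0 + (\hat{z}\times\hat{x})\cdot 1 + \hat{z}\,(0)(1) = \hat{y}
$$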

```glsl
/*
Olinde Rodrigues' vector rotation formula for rotating a vector a
around a unit vector b by t radians.
*/
vec3 rodRot( in vec3 a, in vec3 b, in float t )
{
    // Straight from Wikipedia. b must be unit length.
    return normalize(a*cos(t) + cross(b, a)*sin(t) + b*dot(b,a)*(1.0-cos(t)));
}

/*
Marches about a dome above a surface, sampling the texture at each
interval. These samples are weighted by the distance field value at each
sample point, summed, averaged, and returned.
(color() and dist() are the scene's voxel color and distance-field
functions, defined elsewhere in the shader.)
*/
vec3 giColor(in vec3 p, in vec3 n)
{
    // Basically what we're doing here is getting the texture of several
    // positions above the surface. (Note that the texture color is defined
    // per voxel, not per surface point. That is the only reason this works.)
    //
    // To do that we're going to rotate the surface normal off-axis,
    // and then Rodrigues' rotate it around the original normal, sampling
    // as we go. This creates sort of a unit hemisphere above the surface.
    // Is sampling only at the unit distance a robust plan? Nope. Does it
    // provide plausible results? Yep.

    // The vector that is rotated around the normal.
    vec3 r = vec3(0.0);

    // A place to accumulate the GI contributions.
    vec3 gi = vec3(0.0);

    // A vector tangent to the surface. Crossing a vector with any vector not
    // parallel to it yields a vector orthogonal to both. (n.zxy is parallel
    // to n only when n lies along +/-(1,1,1), which never happens for the
    // faces of axis-aligned cubes.)
    vec3 t = normalize(cross(n, n+n.zxy));

    // Lean the normal over progressively farther, in 10 steps of PI/25,
    // staying below 4PI/10. If we lean over to PI/2, we get some nasty
    // artifacting since we are sliding along the surface.
    // Integer counters keep float round-off from changing the sample count.
    const int LEAN_STEPS = 10;
    const int ORBIT_STEPS = 5;
    for(int i = 0; i < LEAN_STEPS; i++)
    {
        // Lean over the surface normal by rotating it around
        // a vector that is orthogonal to it.
        // Jenny are you okay Jenny?
        r = rodRot(n, t, float(i) * 0.125664);

        // March around that orbit in steps of 2PI/5.
        for(int j = 0; j < ORBIT_STEPS; j++)
        {
            // Fade the voxel color toward black as the distance to the
            // nearest surface at the sample point grows. pow() is undefined
            // for negative bases in GLSL, so clamp the distance (negative
            // inside a cube) to zero and square it by hand.
            float d = max(dist(p+r), 0.0);
            gi += mix(color(p+r), vec3(0.0), clamp(d*d, 0.0, 1.0));

            // Going through the extra effort to consider distance showed
            // very little effect; this is the cheaper alternative.
            //gi += color(p+r);

            r = rodRot(r, n, 1.25664);
        }
    }

    // Average the samples.
    return gi / float(LEAN_STEPS * ORBIT_STEPS);
}
```
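To sanity-check the sample pattern `giColor` walks, here is a C++ port of just its direction-generation loops. This is a sketch: `Vec3`, its helpers, and `hemisphereSamples` are stand-ins for the GLSL built-ins, not part of the original shader. The tangent uses `cross(n, n.zxy)`, which equals the shader's `cross(n, n+n.zxy)` because `cross(n, n)` is zero.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Minimal stand-in for GLSL's vec3 and the built-ins the loops rely on.
struct Vec3 { float x, y, z; };
Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 operator*(Vec3 v, float s) { return {v.x * s, v.y * s, v.z * s}; }
float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
Vec3 cross(Vec3 a, Vec3 b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}
Vec3 normalize(Vec3 v) { return v * (1.0f / std::sqrt(dot(v, v))); }

// Same formula as rodRot(): rotate a by t radians about the unit axis b.
Vec3 rodRot(Vec3 a, Vec3 b, float t) {
    float c = std::cos(t), s = std::sin(t);
    return normalize(a * c + cross(b, a) * s + b * (dot(b, a) * (1.0f - c)));
}

// Directions visited around the unit normal n:
// 10 lean angles (0 .. 9*pi/25) x 5 orbit steps of 2*pi/5 = 50 directions.
std::vector<Vec3> hemisphereSamples(Vec3 n) {
    const float kPi = 3.14159265f;
    assert(std::fabs(dot(n, n) - 1.0f) < 1e-4f);         // n must be unit length
    Vec3 t = normalize(cross(n, Vec3{n.z, n.x, n.y}));   // tangent via n.zxy
    std::vector<Vec3> dirs;
    for (int i = 0; i < 10; ++i) {
        Vec3 r = rodRot(n, t, static_cast<float>(i) * kPi / 25.0f);  // lean away from n
        for (int j = 0; j < 5; ++j) {
            dirs.push_back(r);
            r = rodRot(r, n, 2.0f * kPi / 5.0f);           // step around the orbit
        }
    }
    return dirs;
}
```

Every direction stays within 9π/25 of the normal, so its dot product with n is at least cos(9π/25) ≈ 0.43, safely above the surface. The first "ring" (lean angle 0) is the normal itself, which means it is visited five times. In effect it is weighted five times as heavily as any other direction, a quirk inherited from the shader's loop structure.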